Head on over to your settings and turn on that English dub. We’ve got your guide to all things dub-related.

Dubbing in film and TV has opened the door for endless possibilities. It can allow a project to expand its reach across the world or patch up the holes that would otherwise sink the entire project into oblivion.

No matter how dubbing is used, we can agree that it is a post-production tool that can breathe life into a film or TV series. Streaming has allowed foreign content like Money Heist, M.F.K.Z, and Dark to rise to a level of popularity that might not have been possible without dubbing. Dubs offer shows in a wide range of languages for those who don’t want subtitles or can’t read them.

While the debate over subtitles vs. dubbing rages on, dubbing is here to stay. Understanding the history of dubbing in filmmaking and why many filmmakers still use the post-production tool may make you favor dubs over subs. Let’s break down the importance of dubbing and how to construct a good dub in your next project.

What Is Dubbing?

Dubbing is the process of adding new dialogue or other sounds to the audio track of a project that has already been filmed. The term “dubbing” came either from doubling the Vitaphone sound disc to synchronize sound with a motion picture or from doubling an actor’s voice in films at the beginning of the sound era.

Dubbing is typically used to translate foreign-language films into the audience’s language of choice. Foreign language films are translated from the project’s original dialogue, and the translator carefully decides what words to use based on lip movement, tone, and script.

There are two types of foreign language dubbing: animation dubbing and live-action dubbing. Animation dubbing allows the voice actors to have more freedom with their performances because animated faces are not as nuanced as human faces. Live-action dubbing is an imitation of the original performer’s acting, but with a different voice. It is more constraining, limiting the freedom of the voice actor’s performance.

An editor might also dub the audio when the original audio from filming isn’t usable. Dubbing allows the filmmaker to obtain high-quality dialogue regardless of the actual conditions that existed during shooting.

Dubbing is also used to add voice-over narration or sound effects to the original soundtrack, or to substitute a more pleasing singing voice in musical numbers, which is recorded before the numbers are filmed. The new audio must be mixed with the other audio tracks so that the dubbing doesn’t become distracting to an audience.

The History of Dubbing

Dubbing came to exist due to the limitations of sound-on-film in the early days of cinema. In 1895, Thomas Edison experimented with synchronizing sound-on-film with his Kinetophone, a machine that synchronized a kinetoscope and a phonograph to produce the illusion of motion accompanied by sounds, but the lack of amplification led to the Kinetophone’s failure.

Gaumont’s Chronophone and Nolan’s Cameraphone followed Edison’s inventions, but it wasn’t until 1923 that inventor Lee De Forest unveiled his Phonofilm, the first viable optical sound-on-film technology.

Unfortunately for De Forest, Bell Labs and its subsidiary Western Electric developed a 16-inch shellac disc revolving at 33.3 RPM that recorded 9 minutes of sound and outperformed the Phonofilm in sound quality, so Warner Bros. decided to move forward with the industrial giant. This system, known as the Vitaphone, delivered a significantly better signal-to-noise ratio than the consumer-standard 78 RPM disc. The Vitaphone debuted in 1925 to packed auditoriums in New York and San Francisco, where a recording of President Harding’s speech was played.

From that moment on, the search for technology to blend sound into motion pictures was on.

By 1926, Warner Bros. had acquired an exclusive license for the Vitaphone sound-on-disc system, and released their first talking film, Don Juan, later that same year. George Groves used the Vitaphone to record the soundtrack that would play along with the film and became the first music mixer in film history by doing so.

The Vitaphone Credit: Olney Theatre

The following year, The Jazz Singer was released, becoming the first feature-length “talkie.” As soon as audiences heard the line, “You ain’t heard nothing yet,” the silent movie era was essentially dead. That line was one of four talking segments in the film, but the door had been opened to the possibilities of sound in film.

As cinema began transitioning from the silent film era to sound, the fight to have clean and clear dialogue was on. Dubbing, as we know it, started around 1930 with films like Rouben Mamoulian’s Applause pioneering sound mixing in film. Mamoulian experimented with editing all the sounds on two interlocked 35mm tracks, which began the standard film tracklaying/dubbing practice. More and more films started to record actors’ dialogue after shooting scenes, then synchronize the dialogue to the scene. There were also cases of other actors being hired to voice stars who refused to reshoot their silent scenes, as with Louise Brooks, who starred in The Canary Murder Case.

In the early 30s, the standard dubbing prep was to lay dialogue on one track, leaving three tracks to be shared between music and sound effects. The achievement of dubbing allowed artists like Fred Astaire to pre-record his tap steps exactly as he would dance them in his films. In the 1940s, Disney’s Fantasia was recorded on a 9-track Omni-directional FantaSound, but only four U.S. theaters installed the equipment.

As U.S. antitrust legislation forced studios to innovate or raise the standards of cinema, studios decided to tackle the sound quality issue by recording a 360-degree soundtrack on six magnetic channels. It wasn’t until the early 70s that Ray Dolby adapted a noise reduction technology developed for multi-track recorders for cinema sound. Dolby understood that theatre owners would only purchase audio equipment that was good enough to justify the level of investment it demanded.

Dolby’s solution to audio quality Credit: Local695

The standard practice for encoding Dolby mag masters was to create separate four-track masters for dialogue, effects, and music, each of which was encoded with left, center, right, and surround channels.

Having four-track masters that separate the dialogue, effects, and music in a film allows editors to cut in new sound whenever location dialogue is not salvageable. This practice is still used in modern filmmaking, but filmmakers have found other uses for dubbing as well. By removing the dialogue track master, foreign studios could add a new layer of dialogue in any language and mix it into the original film.
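To make the idea of swapping out the dialogue master concrete, here is a minimal Python sketch. It is only an illustration: the stem names, the `mix` and `replace_dialogue` helpers, and the tiny lists of sample values are all hypothetical, and a real mix involves levels, panning, and processing that this ignores.

```python
def mix(stems):
    """Sum equally long stems sample by sample into one mono track.

    `stems` maps a stem name (for example "dialogue") to a list of samples.
    """
    lengths = {len(samples) for samples in stems.values()}
    if len(lengths) != 1:
        raise ValueError("all stems must be present and the same length")
    return [sum(frame) for frame in zip(*stems.values())]


def replace_dialogue(stems, new_dialogue):
    """Return a copy of the stems with only the dialogue stem swapped."""
    localized = dict(stems)  # music and effects are reused untouched
    localized["dialogue"] = new_dialogue
    return localized


# Hypothetical two-sample stems, just to show the shape of the data.
original = {
    "dialogue": [0.5, 0.0],
    "effects": [0.25, 0.25],
    "music": [0.125, 0.125],
}
dubbed = replace_dialogue(original, [0.0, 0.5])
original_mix = mix(original)
dubbed_mix = mix(dubbed)
```

Because music and effects live on their own masters, only the dialogue changes between the two mixes, which is what makes a foreign-language version practical.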

Why Is Dubbing Important?

Dubbing can increase the reach of a project. If a film is to be released internationally and reach a wider audience, it will need to be dubbed into the language of each country where it is released.

Subtitles can expand a film’s reach just as much as a dubbed version of the film can, but there are limitations to subtitles. For one, some countries prohibit a film from being shown in its original language. Another reason is that some audience members can find the subtitles distracting from what is happening on screen, or they are unable to read the subtitles fast enough to understand what is happening in the story. Many children’s programs and films are dubbed because children are not going to read subtitles.

Another reason that dubbing is important is that the original audio isn’t always salvageable. Dialogue may be unclear or inaudible because of a long-distance shot, air traffic, or background noise, or because the microphone was unable to pick up the actors’ voices. In these cases, dialogue can be recorded after filming and mixed into the film without the audience missing out on any dialogue.

How to Dub a Movie or Show

Audio dubbing requires planning, especially if you are translating dialogue from another language.

The process begins when a video master is sent to the localization provider. The video master will include a script, the video, and a sound mix for the project. The script is then time-coded, meaning each line is tagged with a timecode, the reference number given to a specific point in time within a media file. If a script is not sent, the provider will transcribe the dialogue into written text.
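If you have never worked with timecode, this small Python sketch shows how a frame count maps to the familiar HH:MM:SS:FF form and back. It assumes a simple non-drop-frame count at 24 frames per second, and the function names are made up for this example; real projects may use other frame rates or drop-frame timecode.

```python
def frames_to_timecode(frame_index, fps=24):
    """Convert a frame count to non-drop-frame HH:MM:SS:FF timecode."""
    if frame_index < 0 or fps <= 0:
        raise ValueError("frame_index must be >= 0 and fps must be > 0")
    total_seconds, frames = divmod(frame_index, fps)
    minutes, seconds = divmod(total_seconds, 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


def timecode_to_frames(timecode, fps=24):
    """Convert HH:MM:SS:FF timecode back to a frame count."""
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


# A line that starts one hour, one minute, one second, and five frames in.
start = frames_to_timecode(87869)
```

With every line of the script tagged this way, the translator, director, and editor can all point to the same moment in the picture.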

A script is created by a translator and scriptwriter. They will sit down and write the script in whichever language they are translating to based on the dialogue in the project. The translator has to keep linguistic differences in mind so the dialogue stays conversational rather than reading like the awkward direct translation that Google Translate would provide.

The translator and scriptwriter, sometimes the same person, will try to find the best words that preserve the tone of the dialogue and scene, the performances already established in the project, and the themes of the story while finding words that match up with the lip movements of a character. Bad syncs between the character’s lip movements and the dub can be very distracting to the audience.

Translating editors are the glue to dubbing and subbing foreign movies. Credit: Dubbing King

The translator will know how to create timestamps for every original scene in the project so the voice actors know when each line of dialogue takes place and how long it runs. That way, the dubbed lines don’t end up too short or too long.
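One rough way to picture that length check is the Python sketch below. Everything in it is an assumption for illustration: the `Cue` class, the 15 characters-per-second speaking rate, and the 15% tolerance are invented numbers, and in practice a translator judges fit by ear and by watching the actor’s mouth.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    start: float  # seconds from the start of the scene
    end: float    # seconds from the start of the scene
    text: str     # the translated line


def estimated_duration(text, chars_per_second=15.0):
    """Guess how long a line takes to say from its non-space characters."""
    spoken = [ch for ch in text if not ch.isspace()]
    return len(spoken) / chars_per_second


def check_fit(cue, chars_per_second=15.0, tolerance=0.15):
    """Label a translated line as 'too short', 'fits', or 'too long'."""
    window = cue.end - cue.start
    if window <= 0:
        raise ValueError("cue must end after it starts")
    ratio = estimated_duration(cue.text, chars_per_second) / window
    if ratio > 1 + tolerance:
        return "too long"
    if ratio < 1 - tolerance:
        return "too short"
    return "fits"


# A hypothetical two-second slot with a 30-character line.
verdict = check_fit(Cue(start=10.0, end=12.0, text="a" * 30))
```

A line flagged as too long or too short goes back to the translator for a rewrite before it ever reaches the recording booth.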

While the script is being translated, voice actors will need to be cast to perform the script. Finding voice actors who are fluent in the language that the project is being dubbed in is very important. The voices should sound similar to the original actors’ voices or sound as if they could be the character on screen. If the original actor or character has a deep voice and looks like they should have a deep voice, then cast a voice actor who can do a deep voice. The dub needs to sound natural and match the tone of a character’s voice and performance.

During the recording, the director and a sound engineer will work closely with the voice actor to ensure that the word choices are synchronized with the lip movements of a character. Typically, voice actors will not get their lines until they arrive on set, so the director will have them do a cold read, then provide tips on how to improve the line. The sound engineer is there to make sure the dialogue fits the scene, and that the voice actor is saying enough words to make the dialogue look natural.

Once voice actors have recorded their lines, it is time to edit the dub into the existing audio track. A new audio track will be mixed with the existing audio by a sound engineer and editor during post-production. Editors will have to adjust the timing of the dub in the scenes by slowing down or speeding up a scene so the new audio matches.
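To get a feel for the size of that timing adjustment, here is a minimal Python sketch that compares the length of a recorded take with the slot it has to fill. The `fit_take` helper and the 10% limit are hypothetical choices for this example, not an industry rule; past some point a retake is a better fix than a speed change.

```python
def fit_take(take_seconds, slot_seconds, max_change=0.10):
    """Return (speed_factor, within_limit) for fitting a take into a slot.

    A speed_factor above 1.0 means the take is longer than the slot and
    would have to play faster; below 1.0 means it would have to play slower.
    """
    if take_seconds <= 0 or slot_seconds <= 0:
        raise ValueError("durations must be positive")
    factor = take_seconds / slot_seconds
    return factor, abs(factor - 1.0) <= max_change


# A 2.1-second take for a 2.0-second slot needs only a small speed-up.
factor, acceptable = fit_take(2.1, 2.0)
```

If the second value comes back False, the gap is too large to hide with a speed change under the assumed limit, and the line probably needs to be re-recorded or rewritten.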

Dubs Rise in Popularity

Dubbing has always been popular in filmmaking, but the use of dubbing foreign-language films found popularity in the late 1930s and 40s. With European countries swept up in ideas of nationalism, citizens of those countries were limited to films and entertainment that were in their country’s national language.

Many foreign films that were viewed in these extremely nationalistic countries like Nazi Germany, Mussolini’s Italy, and Franco’s Spain were subjected to script changes that aligned with their respective country’s ideals and dubbed because of a distaste toward foreign languages.

The Soviet Union used dubbing as a part of its communist censorship programming, which lasted until the 1980s. During these times, previously banned films started to flood into the country in the form of low-quality, home-recorded videos with one voiceover dub speaking as all of the actors in the film. Surprisingly, this one-voice-over dub is still common practice in Russian television, with only films having a budget big enough for high-quality dubs.

Now, many countries have been democratized, but the roots of nationalism are still in place. France has the Toubon Law, which prohibits the import of foreign films unless they are dubbed in French. Austria has the highest rejection rate of subtitles in the world, with more than 70% of its audiences preferring dubbed over subbed.

In Quebec, even though English and French are both national languages of Canada, all U.S. films are required to be dubbed in French.

America’s Disdain Towards Dubs

While most Asian and European countries have a general attitude that dubbing is good, the general moviegoer and streaming audiences in the U.S. are opposed to dubbing for philosophical and artistic reasons.

Older audiences were exposed to bad, out-of-sync dubs of Asian and European films in the 70s and 80s and haven’t been exposed to good dubs, and many current American moviegoers haven’t seen as much foreign-language content as most of the world has. With films like The Wailing and Parasite finding massive success in American cinema, the average moviegoer prefers to preserve the artistry of the original film by reading the subtitles.

This new exposure to foreign entertainment found on streaming services has created a new disdain toward dubbing for American audiences.

Much foreign entertainment offers both dubbed and subbed options, so American viewers who dislike one can watch the show or film with the other. As the U.S. is exposed to more and more foreign-language series and films, that dislike towards dubs may fade.

Dubbing in Anime

The sub vs. dub debate is a hot-button topic for anime fans. Unlike live-action movies and shows, animation allows voice actors freedom to perform without too many constraints. The character’s mouth movements do not have to line up perfectly with the audio, and the translator reinterprets the show for an English or other audience.

The problem with subtitles is that they are condensed versions of what the characters are saying to one another because they need to be able to fit on screen. The translations are not always perfect, and what is being said is normally chopped down into digestible bites that a viewer can read quickly. Subtitles can take viewers out of the scene by forcing them to keep reading instead of watching.

Some people also report that they retain information better when hearing it rather than reading it. Dialogue they hear in a show sticks with them longer.

One of the main problems found in dubbed anime is censorship. American-dubbed anime has a younger target audience, so scenes, suggestive dialogue, or anything deemed taboo in the U.S. are altered to make the content “suitable” for the viewer. Goku in the dubbed version of Dragon Ball Z sounds like a grown man with a deep voice but acts like a child. In the Japanese version of the anime, Goku sounds like a child. This was an obvious character choice made by the creators of the show.

The main reason viewers do not like dubbed anime is that the dubbing seems “off.” It is a weird anomaly that exists because American audiences are not familiar with foreign shows, films, or content. As viewership grows for these shows, the budget to dub will increase and provide the showrunners with more experienced voice actors, directors, and editors.

I don’t have a preference and normally try both the sub and dub versions of any anime I start to see which I like best.

How to Avoid a Bad Dub

Dubbing a film or show can be tricky. The last thing a filmmaker would want is for a dub to distract the audience from the story.

Most of the time, voice actors and directors are working on the dubs with scripts that were written the night before since the turnaround time for dubs is so quick. Many of the final lines that make it into the final dubbed version of a foreign project are cold reads since voice actors don’t always get to see the script ahead of time.

Sometimes, a dub that is out of sync with a film or series creates scenes that are awkward, badly synchronized, and just feel jarring to watch. Check out this scene from Squid Game to see what I mean:

Video is no longer available: youtu.be/MHkrjL0gm3M

While it isn’t the worst dubbing in the world, there is something that feels off about the entire scene. Part of it has to do with the fact that native English speakers can see that the synchronization is off and that the diction of the English voices does not match up with the actors’ performance.

Although voice actors are limited in their performances on live-action projects, a voice actor should be able to imitate an actor’s performance through their voice by watching the original actor’s gestures, nuanced facial movements, and lip movements when they speak. Voice actors are typically alone in a recording booth, unable to hear the other actors’ performances. The voice director will give tips to help mold the performance to their liking so the dubbed voices feel as natural as possible since they know what all of the other voices will sound like in the finished project.

What Makes a Good Dub?

A creative and technical process requiring talent and time is what makes a good dub. The turnaround time for a feature-length movie is usually about six to 12 weeks. This time includes rewriting the script into another language, recording, and sound mixing.

Finding good performances that sync up to the project requires experienced performers and directors. It takes longer to get a good dub from voice actors and directors who have little dubbing experience.

Sound engineering and mixing are also components of a good dub. When the mix is done well, dubbed voices can sound like they were recorded on location and blend into the soundtrack.

Check out this clip from Money Heist to see how the dubbed voices are mixed well into the show’s actions and other sound effects:

If you want to get experience in dubbing, then here are some of the best kinds of software you can download.

Adobe Audition CC: Adobe Audition CC is one of the best audio dubbing programs on the market. The software gives users enormous power for editing, mixing, and creating crisp audio that will improve the quality of any video, film, show, or short. You can insert audio tracks into the desired video clips, add sound effects, and clean up and restore audio while having access to a wide variety of tools to edit and modify audio tracks.

WavePad: There is also WavePad, software that allows you to record audio and import a wide range of audio formats including GSM, VOX, WMA, OGG, FLAC, MP3, and more. WavePad lets you edit audio, reduce noise, and restore audio, and it has a wide range of audio effects that are easy to use. For longer projects, WavePad offers a bookmark function so you never lose your place.

Magix Music Maker: This software is perfect for anyone who is just getting into sound mixing. Magix Music Maker features a visually engaging interface and system that is easy to navigate. Its simple design is supported by its powerful editing tools. Many professional sound engineers use this software because of its simplicity while still being able to perform the same functions as the best software used for dubbing.

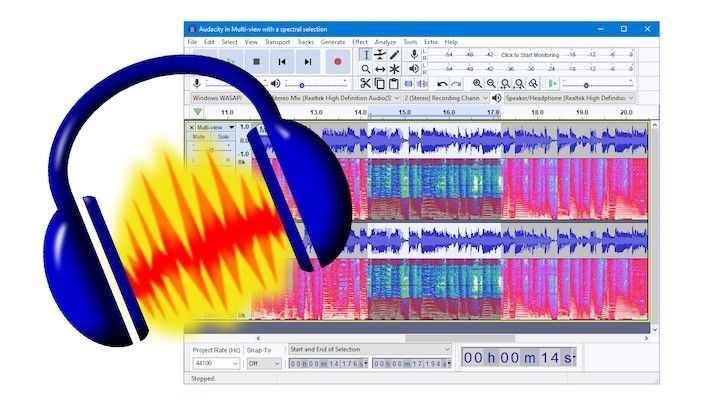

Audacity: Audacity is a free audio dubbing tool that both amateurs and professionals use. The interface is plain and simple to use but makes up for the lack of flair with its functionality. The audio editing program offers many features like managing multiple recording tracks, voice levels, and recording from microphones or multiple channels.

Audacity software Credit: Prosound Network

Without the discovery of dubs and synchronizing sound to motion pictures, cinema could have been shown in silence up to this very day. Dubs have forever changed the course of sound in film and television by pioneering the way for better audio quality. We should appreciate dubs’ impact on modern cinema instead of bashing them for their inability to sync with an actor’s lip movements, and recognize that dubs are preferred by many audiences and are more inclusive.

Next time you watch a series or film, turn on the dubs. You may find a better way to translate the script or mix the audio better to make the dub voices sound natural. Practice how you would direct a voice actor or mix the audio to make a better dub.

As more and more foreign content is finding popularity in the U.S., the demand for voice actors, voice directors, and sound engineers will increase, and you might find yourself working on those projects with your newfound knowledge of dubbing.